Is Robust Machine Learning Possible?

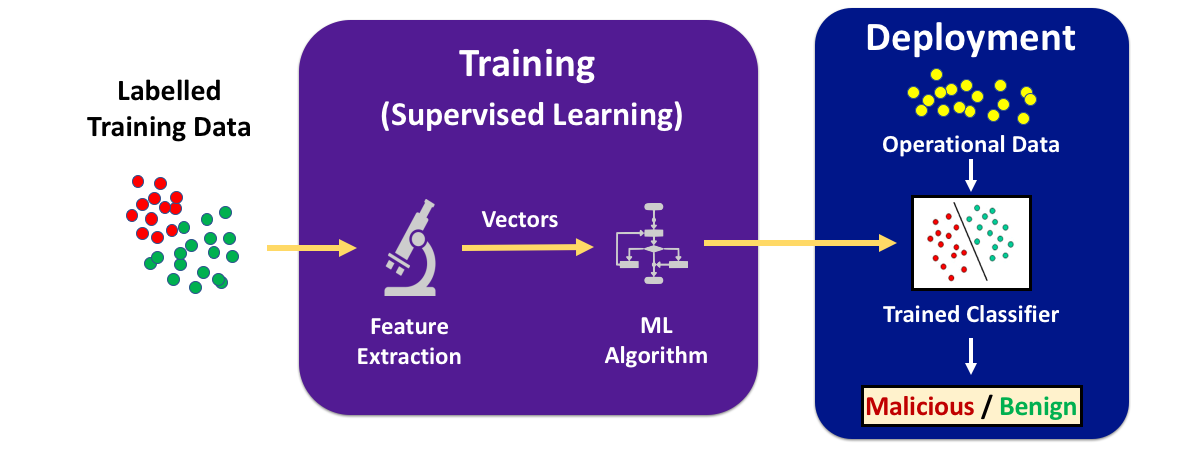

Machine learning has shown remarkable success in solving complex classification problems, but current machine learning techniques produce models that are vulnerable to adversaries who wish to confuse them. This vulnerability is especially serious when the models are used for security applications such as malware classification.

A key assumption of machine learning is that a model trained on training data will perform well in deployment, because the training data is representative of the data the classifier will see once it is deployed.

When machine learning classifiers are used in security applications, however, this assumption may not hold: adversaries may be able to generate samples that deliberately exploit the gap between the training data and the inputs the deployed classifier receives.

Our project is focused on understanding, evaluating, and improving the effectiveness of machine learning methods in the presence of motivated and sophisticated adversaries.

Projects

Papers

Xiao Zhang and David Evans. Incorporating Label Uncertainty in Understanding Adversarial Robustness. In 10th International Conference on Learning Representations (ICLR). April 2022. [arXiv] [OpenReview] [Code]

Fnu Suya, Saeed Mahloujifar, Anshuman Suri, David Evans, and Yuan Tian. Model-Targeted Poisoning Attacks with Provable Convergence. In 38th International Conference on Machine Learning (ICML). July 2021. [arXiv] [PMLR] [PDF] [Code]

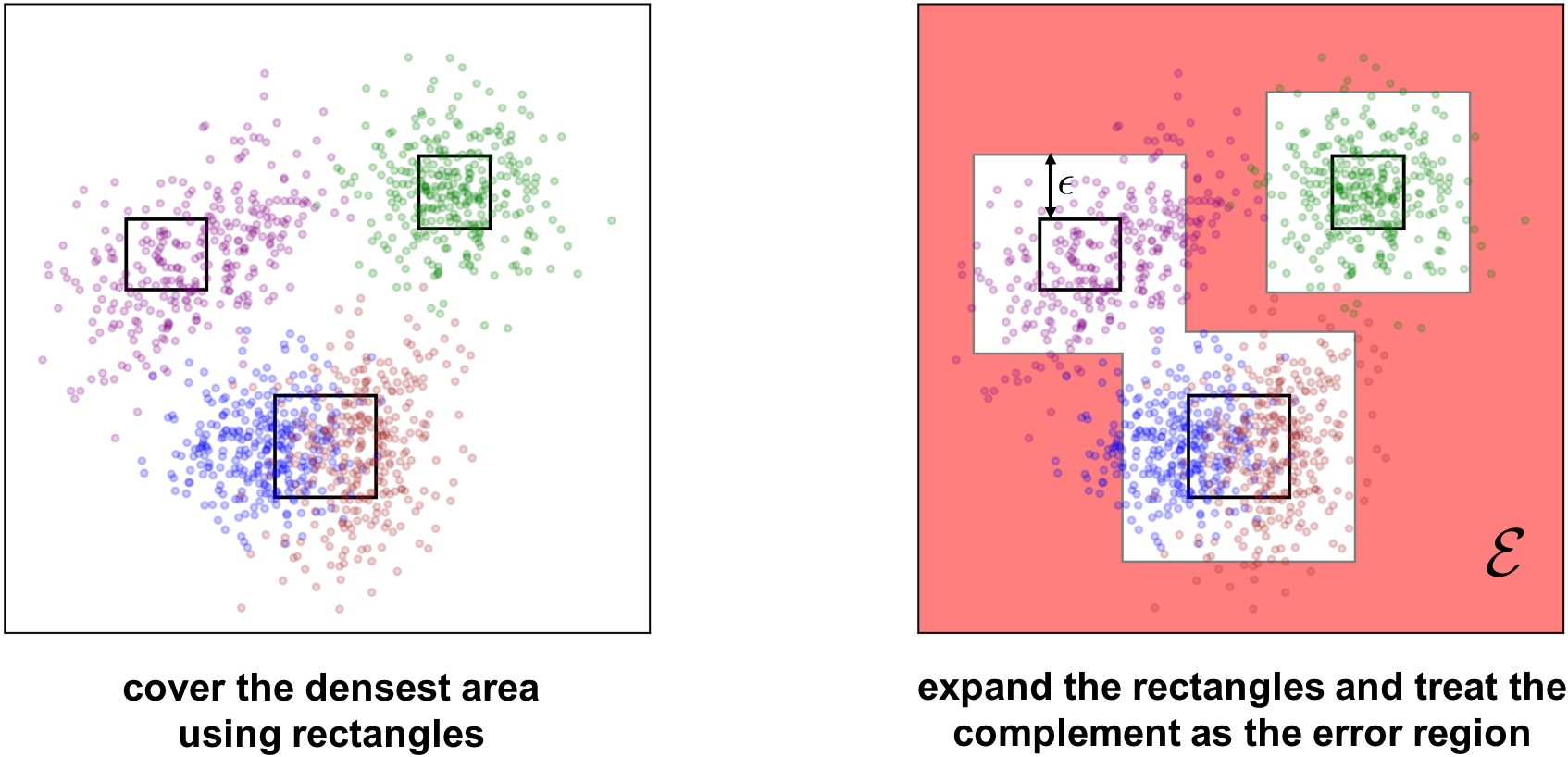

Jack Prescott, Xiao Zhang, and David Evans. Improved Estimation of Concentration Under ℓp-Norm Distance Metrics Using Half Spaces. In Ninth International Conference on Learning Representations (ICLR). May 2021. [arXiv] [OpenReview] [Code]

Fnu Suya, Jianfeng Chi, David Evans, and Yuan Tian. Hybrid Batch Attacks: Finding Black-box Adversarial Examples with Limited Queries. In 29th USENIX Security Symposium. Boston, MA. August 12–14, 2020. [PDF] [arXiv] [Code]

Saeed Mahloujifar★, Xiao Zhang★, Mohammad Mahmoody, and David Evans. Empirically Measuring Concentration: Fundamental Limits on Intrinsic Robustness. In NeurIPS 2019. Vancouver, December 2019. (Earlier versions appeared in Debugging Machine Learning Models and Safe Machine Learning: Specification, Robustness and Assurance, workshops attached to the Seventh International Conference on Learning Representations (ICLR). New Orleans. May 2019.) [PDF] [arXiv] [Post] [Code]

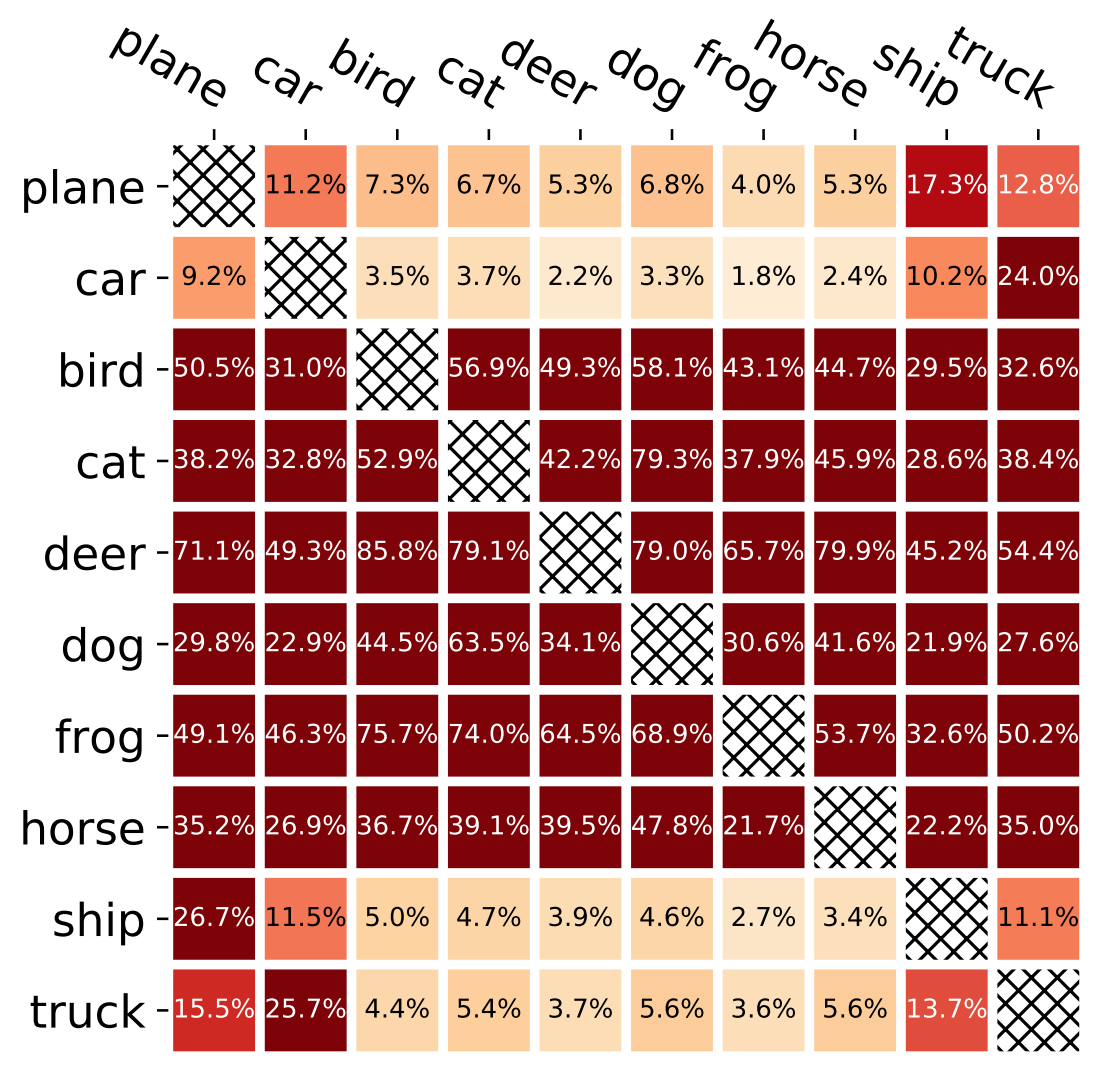

Xiao Zhang and David Evans. Cost-Sensitive Robustness against Adversarial Examples. In Seventh International Conference on Learning Representations (ICLR). New Orleans. May 2019. [arXiv] [OpenReview] [PDF]

Weilin Xu, David Evans, and Yanjun Qi. Feature Squeezing: Detecting Adversarial Examples in Deep Neural Networks. In 2018 Network and Distributed System Security Symposium (NDSS). San Diego, California. 18–21 February 2018. Full paper (15 pages): [PDF]

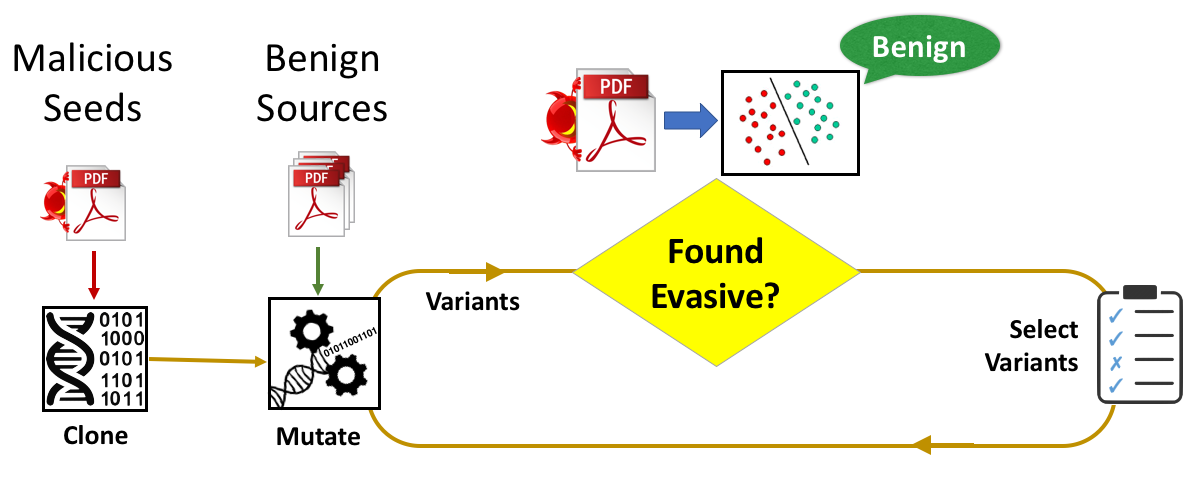

Weilin Xu, Yanjun Qi, and David Evans. Automatically Evading Classifiers: A Case Study on PDF Malware Classifiers. In Network and Distributed System Security Symposium (NDSS) 2016. San Diego, California. 21–24 February 2016. Full paper (15 pages): [PDF]

Talks

Understanding Intrinsic Robustness Using Label Uncertainty. Xiao Zhang’s talk at ICLR 2022.

Hybrid Batch Attacks. Fnu Suya’s talk at USENIX Security 2020.

Intrinsic Robustness using Conditional GANs. Xiao Zhang’s talk at AISTATS 2020.

Trustworthy Machine Learning. Mini-course at the 19th International School on Foundations of Security Analysis and Design. Bertinoro, Italy. 26–28 August 2019.

Can Machine Learning Ever Be Trustworthy? University of Maryland, Booz Allen Hamilton Distinguished Colloquium. 7 December 2018. [SpeakerDeck] [Video]

Mutually Assured Destruction and the Impending AI Apocalypse. Opening keynote, 12th USENIX Workshop on Offensive Technologies 2018. (Co-located with USENIX Security Symposium.) Baltimore, Maryland. 13 August 2018. [SpeakerDeck]

Is "Adversarial Examples" an Adversarial Example? Keynote talk at 1st Deep Learning and Security Workshop (co-located with the 39th IEEE Symposium on Security and Privacy). San Francisco, California. 24 May 2018. [SpeakerDeck]

Code

Hybrid Batch Attacks: https://github.com/suyeecav/Hybrid-Attack

Empirically Measuring Concentration: https://github.com/xiaozhanguva/Measure-Concentration

EvadeML-Zoo: https://github.com/mzweilin/EvadeML-Zoo

Genetic Evasion: https://github.com/uvasrg/EvadeML (Weilin Xu)

Cost-Sensitive Robustness: https://github.com/xiaozhanguva/Cost-Sensitive-Robustness (Xiao Zhang)

Adversarial Learning Playground: https://github.com/QData/AdversarialDNN-Playground (Andrew Norton) (mostly superseded by the EvadeML-Zoo toolkit)

Feature Squeezing: https://github.com/uvasrg/FeatureSqueezing (Weilin Xu) (superseded by the EvadeML-Zoo toolkit)

Team

Hannah Chen (PhD student, working on adversarial natural language processing)

Fnu Suya (PhD student, working on batch attacks)

Yulong Tian (visiting PhD student)

Scott Hong (undergraduate researcher)

Evan Rose (undergraduate researcher)

Jinghui Tian (undergraduate researcher)

Tingwei Zhang (undergraduate researcher)

David Evans (Faculty Co-Advisor)

Yanjun Qi (Faculty Co-Advisor for Weilin Xu)

Yuan Tian (Faculty Co-Advisor for Fnu Suya)

Alumni

Weilin Xu (PhD student who initiated the project, led work on Feature Squeezing and Genetic Evasion, now at Intel Research, Oregon). Dissertation: Improving Robustness of Machine Learning Models using Domain Knowledge.

Xiao Zhang (PhD student, led work on cost-sensitive adversarial robustness and intrinsic robustness, now at CISPA Helmholtz Center for Information Security, Germany). Dissertation: From Characterizing Intrinsic Robustness to Adversarially Robust Machine Learning.

Mainuddin Ahmad Jonas (PhD student, working on adversarial examples)

Johannes Johnson (Undergraduate researcher working on malware classification, summer 2018)

Anant Kharkar (Undergraduate researcher, worked on Genetic Evasion, 2016-2018)

Noah Kim (Undergraduate researcher, worked on EvadeML-Zoo, 2017)

Yuancheng Lin (Undergraduate researcher working on adversarial examples, summer 2018)

Felix Park (Undergraduate researcher, worked on color-aware preprocessors, 2017-2018)

Helen Simecek (Undergraduate researcher working on Genetic Evasion, 2017-2019)

Matthew Wallace (Undergraduate researcher working on natural language deception, 2018-2019; now at University of Wisconsin)